Advanced Computing, Mathematics and Data

Research Highlights

August 2018

Automating Expertise

New training approach teaches a deep learning model to learn expert knowledge

The Achilles’ heel of modern deep learning research is the need for large labeled datasets. The problem is worse in fields such as the chemical sciences, where acquiring labels can be expensive and time-consuming. Deep learning algorithms, which let computers “learn” from data without explicitly programmed expert knowledge, have improved predictions of novel chemical properties, but the lack of usable data to train such models remains a major impediment. To address this, a research team from Pacific Northwest National Laboratory (PNNL) has proposed a new training approach, embodied in a neural network called ChemNet. Beyond the team’s initial findings, ChemNet also has potential applications in other domains with similar data challenges.

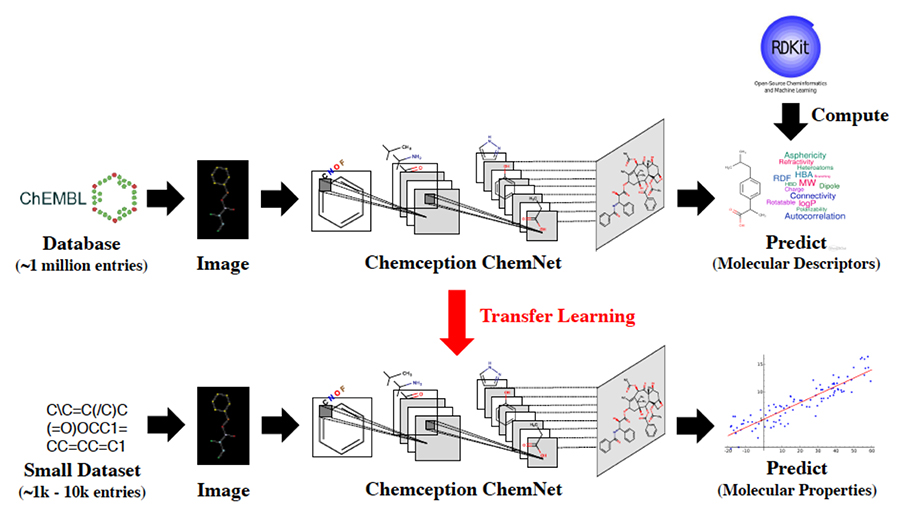

Schematic of ChemNet’s training process that combines weak supervision with transfer learning.

ChemNet is trained with a new approach that lets it learn expert knowledge from large, initially unlabeled databases, and it outperforms current state-of-the-art supervised learning methods. The team will present ChemNet in the Applied Data Science Track at the 24th ACM SIGKDD Conference on Knowledge Discovery and Data Mining (KDD2018) in London, with a poster presentation on Aug. 21, 2018, and an oral presentation on Aug. 23, 2018.

Weak Supervision, Strong Possibilities

Large labeled datasets, on the order of millions of entries, have fueled deep learning and affected many fields, particularly computer vision and language translation. However, some fields, such as chemistry, tend to have small, fragmented datasets. This lack of labeled data is a bottleneck for deep learning.

Led by Garrett Goh, a scientist in PNNL’s Data Sciences group and a former Linus Pauling Fellow, the team developed an approach that takes rule-based labels derived from prior feature-engineering research and uses them as relatively inexpensive labels for weakly supervised learning.
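To make the idea of rule-based labels concrete, the sketch below computes a few cheap, deterministic labels directly from a SMILES string (a text notation for molecules). The specific rules (carbon, aromatic-atom, halogen, and ring-closure counts) and the function name `rule_based_labels` are hypothetical illustrations, not the features the team used. The toy parser also assumes unbracketed, organic-subset SMILES with single-digit ring closures.

```python
from typing import Dict

# Lowercase symbols that denote aromatic atoms in organic-subset SMILES.
AROMATIC_ATOMS = set("bcnops")


def rule_based_labels(smiles: str) -> Dict[str, int]:
    """Hypothetical rule-based labels computed from a SMILES string.

    Assumes organic-subset SMILES with no bracket atoms ([nH], [Na+], ...)
    and single-digit ring-closure numbers.
    """
    counts = {"carbon": 0, "aromatic": 0, "halogen": 0, "ring_closures": 0}
    ring_digits = 0
    i = 0
    while i < len(smiles):
        ch = smiles[i]
        nxt = smiles[i + 1] if i + 1 < len(smiles) else ""
        # Two-letter halogens must be checked before single-letter C and B.
        if (ch, nxt) in {("C", "l"), ("B", "r")}:
            counts["halogen"] += 1
            i += 2
            continue
        if ch in "FI":
            counts["halogen"] += 1
        elif ch == "C":
            counts["carbon"] += 1
        elif ch in AROMATIC_ATOMS:
            counts["aromatic"] += 1
            if ch == "c":
                counts["carbon"] += 1
        elif ch.isdigit():
            ring_digits += 1  # each ring-closure number appears twice
        i += 1
    counts["ring_closures"] = ring_digits // 2
    return counts


if __name__ == "__main__":
    # Each molecule gets a vector of cheap "weak" labels; no lab work needed.
    for smi in ["CCO", "c1ccccc1", "ClC(Cl)Cl"]:
        print(smi, rule_based_labels(smi))
```

Because labels like these can be computed for every molecule in a large unlabeled database, they supply a volume of training targets that hand-measured properties cannot.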

In the initial pre-training step, the network is trained with weak supervision to predict these alternate labels. This step is then combined with transfer learning, which takes a model trained on one task and reuses it for another. The network therefore first learns representations of chemical rules, and the expert “knowledge” it gains during pre-training puts it in a better position to learn new, more complex chemical labels, even in the absence of big data. It does so by learning domain-relevant, hierarchically related representations that it can leverage to model more sophisticated chemical properties.
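The two-stage procedure can be sketched with a deliberately tiny linear model in plain Python. Stage 1 learns a shared encoder `W` by predicting weak labels; stage 2 freezes `W` and fits only a new output head on a small labeled set. The linear encoder, the frozen-encoder fine-tuning strategy, the learning rates, and all function names are illustrative assumptions; ChemNet itself uses deep CNN and RNN architectures.

```python
import random
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def pretrain(xs: List[Vector], weak: List[Vector], hidden: int,
             lr: float = 0.01, epochs: int = 200, seed: int = 0) -> Tuple[Matrix, Matrix]:
    """Stage 1 (weak supervision): fit encoder W and weak-label head V by SGD."""
    rng = random.Random(seed)
    W = [[rng.uniform(-0.5, 0.5) for _ in xs[0]] for _ in range(hidden)]
    V = [[rng.uniform(-0.5, 0.5) for _ in range(hidden)] for _ in weak[0]]
    for _ in range(epochs):
        for x, t in zip(xs, weak):
            h = matvec(W, x)                              # shared representation
            r = [p - y for p, y in zip(matvec(V, h), t)]  # residual per weak label
            # Gradient of 0.5*||r||^2 w.r.t. h is V^T r (computed before V changes).
            gh = [sum(V[j][i] * r[j] for j in range(len(r))) for i in range(hidden)]
            for j, rj in enumerate(r):
                for i in range(hidden):
                    V[j][i] -= lr * rj * h[i]
            for i in range(hidden):
                for k, xk in enumerate(x):
                    W[i][k] -= lr * gh[i] * xk
    return W, V


def mse(W: Matrix, u: Vector, xs: List[Vector], ys: List[float]) -> float:
    return sum((dot(u, matvec(W, x)) - y) ** 2 for x, y in zip(xs, ys)) / len(ys)


def fine_tune(W: Matrix, xs: List[Vector], ys: List[float],
              lr: float = 0.02, epochs: int = 100) -> Vector:
    """Stage 2 (transfer): keep W frozen and fit a new head u by gradient descent."""
    feats = [matvec(W, x) for x in xs]  # W is only read, never modified
    u = [0.0] * len(W)
    for _ in range(epochs):
        grad = [0.0] * len(u)
        for h, y in zip(feats, ys):
            r = dot(u, h) - y
            for i, hi in enumerate(h):
                grad[i] += 2.0 * r * hi / len(ys)
        u = [ui - lr * gi for ui, gi in zip(u, grad)]
    return u


if __name__ == "__main__":
    rng = random.Random(1)
    xs = [[rng.random() for _ in range(4)] for _ in range(200)]
    weak = [[x[0] + x[1], x[2] + x[3]] for x in xs]  # cheap, plentiful labels
    W, _ = pretrain(xs, weak, hidden=3)
    few_x = xs[:10]                                   # small "expensive" dataset
    few_y = [sum(x) for x in few_x]                   # related but new target
    u = fine_tune(W, few_x, few_y)
    print("fine-tuned MSE:", mse(W, u, few_x, few_y))
```

Freezing the encoder is the simplest transfer strategy; a common alternative is to keep updating `W` with a smaller learning rate during stage 2.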

In essence, ChemNet imitates the way human experts learn: first mastering basic concepts, then building on them to understand and explain increasingly complex phenomena.

“Using our distinct weak supervised learning approach that uses rule-based labels, ChemNet could be fine-tuned on much smaller datasets to predict unrelated and novel chemical properties that can be applied in areas such as pharmaceuticals and materials research,” Goh said. “More importantly, ChemNet was able to develop chemically relevant internal representations that allowed it to outperform other models trained via the usual supervised methods.”

Effective Across Architectures

At KDD2018, the team will showcase ChemNet’s overall performance, which they evaluated with convolutional neural network (CNN) models trained on images of molecules and recurrent neural network (RNN) models trained on text (SMILES strings). ChemNet consistently outperformed earlier CNN models that did not use transfer learning, such as Chemception.
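For the RNN (text) modality, a molecule’s SMILES string has to be turned into a numeric sequence before it reaches the network. The sketch below shows a simple character-level encoding with padding and one-hot vectors. This scheme, the helper names, and the reserved padding index 0 are assumptions for illustration, not a description of how SMILES2vec or ChemNet preprocess their inputs.

```python
from typing import Dict, List

PAD_ID = 0  # index reserved for padding


def build_vocab(smiles_list: List[str]) -> Dict[str, int]:
    """Map each character seen in the corpus to an integer id starting at 1."""
    chars = sorted({ch for s in smiles_list for ch in s})
    return {ch: i + 1 for i, ch in enumerate(chars)}


def encode(smiles: str, vocab: Dict[str, int], max_len: int) -> List[int]:
    """Truncate or right-pad a SMILES string to a fixed-length id sequence."""
    ids = [vocab[ch] for ch in smiles[:max_len]]  # raises KeyError on unseen characters
    return ids + [PAD_ID] * (max_len - len(ids))


def one_hot(ids: List[int], vocab_size: int) -> List[List[int]]:
    """One-hot rows of width vocab_size + 1 (column 0 stands for padding)."""
    return [[1 if j == i else 0 for j in range(vocab_size + 1)] for i in ids]


if __name__ == "__main__":
    corpus = ["CCO", "c1ccccc1"]
    vocab = build_vocab(corpus)          # {'1': 1, 'C': 2, 'O': 3, 'c': 4}
    seq = encode("CCO", vocab, max_len=5)
    print(seq)                           # [2, 2, 3, 0, 0]
    print(one_hot(seq, len(vocab))[0])   # [0, 0, 1, 0, 0]
```

Fixed-length padding lets molecules of different sizes be batched together; an RNN would then read the one-hot rows one character at a time.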

In their model evaluation, the team used three datasets from the MoleculeNet benchmark (Tox21, HIV, and FreeSolv) for predicting toxicity, activity, and solvation free energy, respectively. The team also assessed ChemNet with an RNN architecture, where it was more accurate than earlier RNN models that did not use transfer learning, such as SMILES2vec.
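Tox21 and HIV are classification tasks and FreeSolv is a regression task, so results on them are typically reported with different metrics. The sketch below implements ROC-AUC and RMSE, the metrics MoleculeNet conventionally recommends for these three datasets. The article does not state which metrics the team reported, so treat this pairing, and the function names, as assumptions.

```python
import math
from typing import Sequence


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Probability that a random positive is scored above a random negative (ties count 0.5)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("ROC-AUC needs at least one positive and one negative")
    wins = 0.0
    for p in pos:
        for n in neg:
            wins += 1.0 if p > n else 0.5 if p == n else 0.0
    return wins / (len(pos) * len(neg))


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root-mean-square error for regression targets such as free energies."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


if __name__ == "__main__":
    print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # 0.75
    print(rmse([1.0, 2.0, 3.0], [1.0, 2.0, 5.0]))
```

The pairwise loop is quadratic in dataset size and is written for clarity; a rank-based formula gives the same ROC-AUC much faster on larger sets such as HIV.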

“These experiments provided a gauge to examine ChemNet’s ability to generalize and predict unseen chemical properties and showed that our training approach can work effectively across network architectures and with diverse data modalities,” Goh said.

Lessons Learned

From the fine-tuning experiments, the team observed that ChemNet developed representations that could be reused and generalized for other chemical tasks. In essence, the network’s lower layers learn representations akin to simpler concepts in the technical domain, and the model uses these as building blocks to describe and explain more sophisticated phenomena. This observation suggested to the team that combining rule-based weak supervision with transfer learning could simulate the conventional learning of a domain expert without explicitly programming in domain-specific rules.

“The design behind our rule-based weak supervised learning approach may be adaptable to other scientific and engineering applications, especially where existing rule-based models can be used to generate data for training specific ‘domain-expert’ neural networks,” Goh added.

The team will present its work at KDD2018 as part of the Applied Data Science Track. KDD2018 serves as the premier interdisciplinary conference uniting researchers and practitioners from data science, data mining, knowledge discovery, large-scale data analytics, and big data. Now in its 24th year, the conference will be held August 19-23, 2018, at ExCeL, the international exhibition center in East London.

Funding:

This work was supported in part by the Linus Pauling Distinguished Postdoctoral Fellowship and Deep Learning for Scientific Discovery Agile Investment Laboratory Directed Research and Development programs at PNNL.

Reference:

- Goh GB, C Siegel,* A Vishnu,** and NO Hodas. 2018. “Using Rule-Based Labels for Weak Supervised Learning: A ChemNet for Transferable Chemical Property Prediction.” To be presented at the 24th ACM SIGKDD Conference on Knowledge Discovery and Data Mining (KDD2018), August 19-23, 2018, London, UK. Available online at: arXiv:1712.02734. (*now at Cray Inc.; **now at AMD.)