PNNL Team Wins Best Paper at Two Conferences

Papers provide in-depth analysis of online news sharing behaviors and how they can be used to explain the performance and biases of AI models

A team of PNNL researchers studying how to evaluate the robustness, accountability, fairness, and transparency of artificial intelligence (AI) models used to detect and quantify deceptive content online recently received the best paper award at two separate conferences for their work.

(Composite image by Shannon Colson | Pacific Northwest National Laboratory)

Accountability and fairness are hot topics in online news sharing. However, studying how news is shared can be challenging because of the massive volume of content submitted by millions of users across thousands of communities every day. A team of Pacific Northwest National Laboratory (PNNL) researchers is working on a project called Fair, Robust, and Transparent Neural Models to Human Perceptions of Deception (RAFT), which looks at how to evaluate the robustness, accountability, fairness, and transparency of artificial intelligence (AI) models used to detect and quantify deceptive content online.

The team recently received the best paper award at two separate conferences for their work.

“It was really exciting to get two awards in the same week,” said Maria Glenski, a data scientist at PNNL. “It’s always an honor to be recognized for doing great research and contributing to the field.”

Most recently, the team’s paper “Political Bias and Factualness in News Sharing across more than 100,000 Online Communities” was named the best paper in the analysis category at the 2021 International AAAI Conference on Web and Social Media (ICWSM), a premier conference for computational social science and the top international conference for social media research.

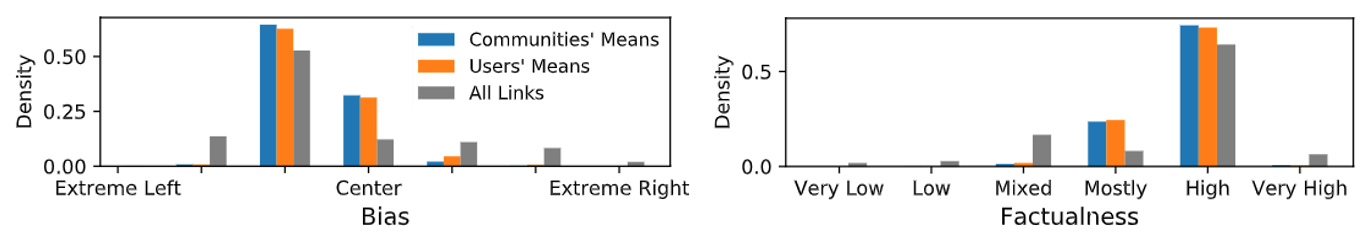

This paper presents the largest study of news sharing on Reddit to date, analyzing more than 550 million links spanning four years. The team used non-partisan news source ratings from Media Bias/Fact Check to annotate links to news sources with their political bias and factualness.
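The annotation step described above can be pictured with a small Python sketch. This is only an illustration, not the team's code: the `SOURCE_RATINGS` table, its domains, and its labels are hypothetical placeholders, and real ratings would come from Media Bias/Fact Check. The sketch assumes each link is matched to a rating by its domain name.

```python
from collections import Counter
from urllib.parse import urlparse

# Hypothetical ratings table (assumption): domain -> bias and factualness labels.
SOURCE_RATINGS = {
    "example-news.com": {"bias": "center", "factualness": "high"},
    "example-daily.org": {"bias": "left-center", "factualness": "mixed"},
}


def domain_of(url):
    """Return the lowercased host of a URL, without a leading 'www.'."""
    host = urlparse(url).hostname or ""
    return host[4:] if host.startswith("www.") else host


def annotate(url):
    """Look up the rating for a link's source, or None if the source is unrated."""
    return SOURCE_RATINGS.get(domain_of(url))


def bias_counts(urls):
    """Count links per bias label, skipping links to unrated sources."""
    counts = Counter()
    for url in urls:
        rating = annotate(url)
        if rating is not None:
            counts[rating["bias"]] += 1
    return counts
```

Grouping such counts by community is one way to compare what kinds of news sources are shared where.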

“We looked into how the level of factualness or the type of bias of news sources across different communities differs,” said Glenski. “Basically, what type of news content is submitted and interacted with.”

This allowed the team to analyze the different trends and behaviors of users.

“We saw that there is more center or center left content than there is on extremes of either end,” said Glenski.

Additionally, the team took home the best paper award at the Natural Language Processing (NLP) for Internet Freedom: Censorship, Disinformation, and Propaganda (NLP4IF) Workshop, held during the North American Chapter of the Association for Computational Linguistics (NAACL) 2021 conference, one of the premier conferences for NLP. The paper, “Leveraging Community and Author Context to Explain the Performance and Bias of Text-Based Deception Detection Models,” evaluated how an AI model that classifies whether social news posts link to credible or deceptive news sources performs in the context of the author and the community in which each post was submitted.

“The ICWSM paper is a foundation for subsequent analysis. We investigated 'what is happening on the platform? What are the differences in communities from view of what types of news are submitted?'” said Glenski. “If we have deception detection models that we are applying to these communities, we can use these characteristics to evaluate model behavior in context and identify or explain model biases.”

In the NLP4IF paper, the team explains the bias of the AI model and its differences in performance across communities or authors using the characteristics identified by the ICWSM paper. The team’s findings show that the model performs better on posts submitted by authors who regularly submit low-factual or deceptive content, or on posts that receive greater acceptance (higher ratings) within communities.
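Comparing model performance across communities or authors can be pictured as computing a metric separately for each group. The Python sketch below is a simplified illustration, not the paper's evaluation: the record fields (`community`, `label`, `predicted`) are hypothetical, and plain accuracy stands in for whatever metrics the team actually used.

```python
from collections import defaultdict


def accuracy_by_group(records, key):
    """Compute accuracy per group, where `key` names the grouping field.

    Each record is a dict with hypothetical fields 'label' and 'predicted'
    plus the grouping field (for example 'community' or 'author').
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for record in records:
        group = record[key]
        total[group] += 1
        correct[group] += int(record["predicted"] == record["label"])
    return {group: correct[group] / total[group] for group in total}
```

A large gap between groups in such a breakdown is the kind of signal that would prompt a closer look at model bias in context.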

“The impact of this work will hopefully prompt other researchers to think of areas that we wouldn’t have,” said Glenski. “It opens up new directions for analyses to better account for the context of content in different online communities.”

Published: August 16, 2021